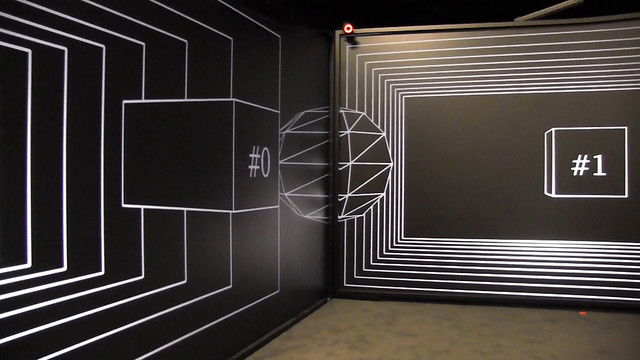

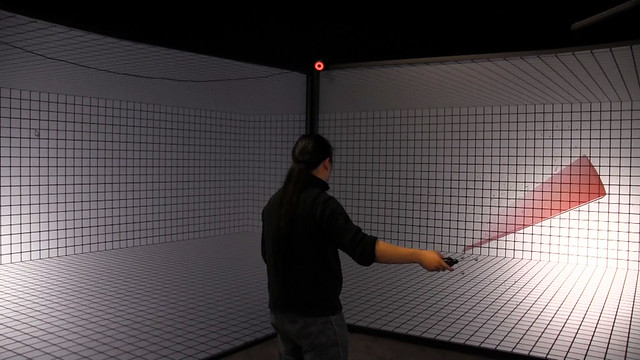

Perspective Tracking in a Triple-Screen CAVE


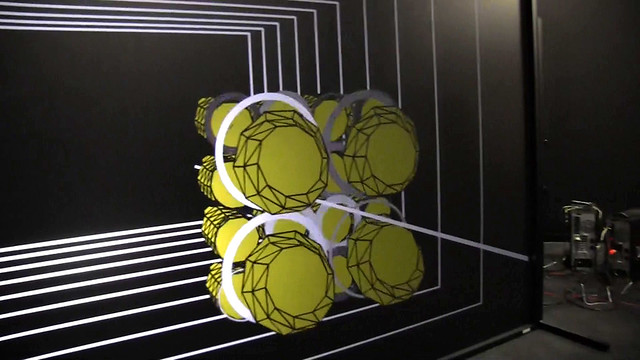

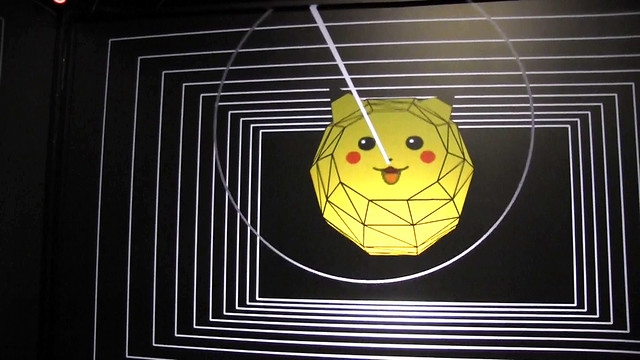

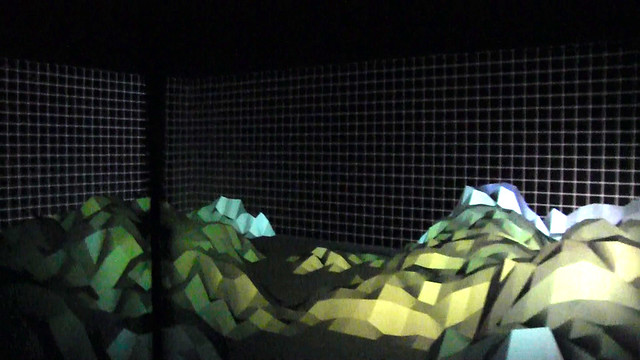

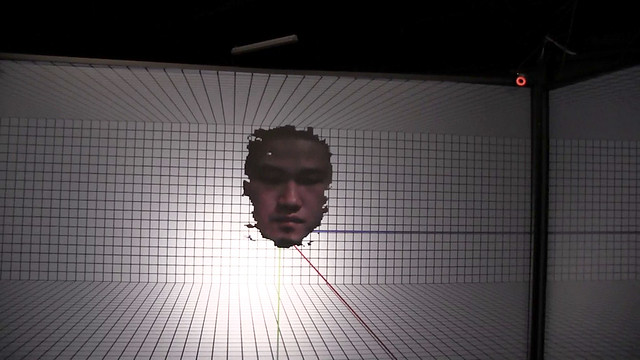

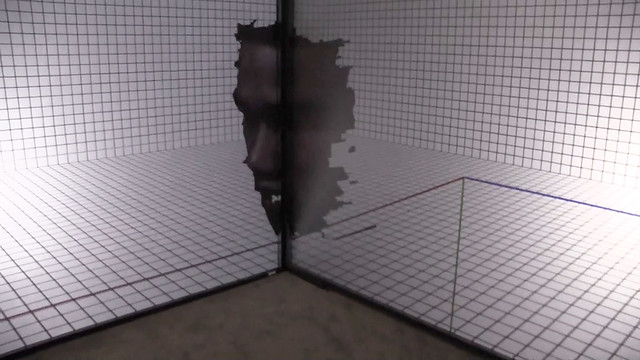

Created between March 2015 and April 2015.

As a continuation of the series of single-screen perspective tracking experiments I did in late 2014 (Portal 2.0, Contra Base 1, Doge Chorus, etc.), Shawn Lawson and I converted the lab late last month into a three-screen box CAVE environment. This video documents several new demos I have developed for the new environment over the past couple of weeks.

For users in China: if the Vimeo video does not display, you can watch the MANA version below.

Each screen is mounted perpendicular to its neighbor(s) and aligned with its corresponding rear projection. Four Vicon Bonita cameras on the top corners cover the central area enclosed by the screens, and the origin is located at the center of that area. Tracking data collected by Vicon is sent through VRPN (Virtual-Reality Peripheral Network) and then over the OSC (Open Sound Control) protocol to Processing, where it is mapped to frustum parameters used to compute three projection matrices that match the screens' physical positions.

Demo 2: Pikachu Quadtree is a rehash of Pikaworm, a quick sketch I made in 2013. The real-time sound effect in Demo 4: Lightsaber is created by overlapping a sine wave and a sawtooth wave generated with Beads. The floating face in Demo 5: Zordon is a 3D reconstruction based on .oni file data recorded with a Kinect and SimpleOpenNI.
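
The step where tracking data "is mapped to frustum parameters" is the core of perspective tracking: each screen needs an asymmetric (off-axis) frustum that depends on where the viewer's head is. Below is a minimal sketch of the standard off-axis (generalized perspective) projection, not the code used in these demos. It assumes each screen is described by three corner points (lower-left `pa`, lower-right `pb`, upper-left `pc`) in the same room frame as the Vicon data, with the origin at the center of the tracked area; the class and method names are hypothetical.

```java
/**
 * Hypothetical helper (not from the original project): computes an
 * asymmetric view frustum for one physical screen from the tracked eye
 * position. All points are in one room frame whose origin is the center of
 * the tracked area.
 */
final class OffAxisFrustum {

    private static double[] sub(double[] a, double[] b) {
        return new double[] {a[0] - b[0], a[1] - b[1], a[2] - b[2]};
    }

    private static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    private static double[] cross(double[] a, double[] b) {
        return new double[] {
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]
        };
    }

    private static double[] normalize(double[] a) {
        double len = Math.sqrt(dot(a, a));
        return new double[] {a[0] / len, a[1] / len, a[2] / len};
    }

    /**
     * @param pa   lower-left screen corner
     * @param pb   lower-right screen corner
     * @param pc   upper-left screen corner
     * @param eye  tracked eye (head) position
     * @param near near-plane distance
     * @return {left, right, bottom, top} of the frustum at the near plane
     */
    static double[] frustum(double[] pa, double[] pb, double[] pc,
                            double[] eye, double near) {
        // Orthonormal screen basis: right, up, and normal (toward the viewer).
        double[] vr = normalize(sub(pb, pa));
        double[] vu = normalize(sub(pc, pa));
        double[] vn = normalize(cross(vr, vu));

        // Vectors from the eye to three screen corners.
        double[] va = sub(pa, eye);
        double[] vb = sub(pb, eye);
        double[] vc = sub(pc, eye);

        // Perpendicular distance from the eye to the screen plane.
        double d = -dot(va, vn);

        // Scale the screen extents, seen from the eye, onto the near plane.
        double scale = near / d;
        return new double[] {
            dot(vr, va) * scale,  // left
            dot(vr, vb) * scale,  // right
            dot(vu, va) * scale,  // bottom
            dot(vu, vc) * scale   // top
        };
    }
}
```

For example, with the front screen of a 2 m cube at z = -1 (`pa = (-1, -1, -1)`, `pb = (1, -1, -1)`, `pc = (-1, 1, -1)`) and `near = 1`, an eye at the origin gives a symmetric {-1, 1, -1, 1}, while an eye at x = 0.5 gives {-1.5, 0.5, -1, 1}. That shift is what keeps the rendered image locked to the physical screen as the viewer moves. Calling this once per screen yields the three frustums. The four values correspond to the first four arguments of Processing's `frustum(left, right, bottom, top, near, far)`; a complete setup would also orient the camera to the screen's basis and place it at the eye, and would account for Processing's default y-down coordinate system.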

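"Overlapping" the two waves in the Lightsaber demo amounts to additive mixing: the sine and sawtooth oscillators are summed sample by sample. The sketch below illustrates the idea in plain Java rather than with the Beads API used in the demo; the class name, frequencies, and sample rate are hypothetical and left to the caller.

```java
/**
 * Hypothetical illustration (not the Beads API): mixes a sine wave and a
 * sawtooth wave by summing them sample by sample.
 */
final class SineSawMix {

    static float[] render(double sineHz, double sawHz, double sampleRate, int numSamples) {
        float[] out = new float[numSamples];
        for (int i = 0; i < numSamples; i++) {
            double t = i / sampleRate;  // time of this sample in seconds
            double sine = Math.sin(2 * Math.PI * sineHz * t);
            // Naive (non-band-limited) sawtooth ramping from -1 up toward 1 each period.
            double phase = (sawHz * t) % 1.0;
            double saw = 2 * phase - 1;
            // Halve the sum so the mix stays within [-1, 1].
            out[i] = (float) (0.5 * (sine + saw));
        }
        return out;
    }
}
```

The naive ramp aliases at higher frequencies, and it only fills a buffer; a library such as Beads also takes care of real-time playback.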